I was having an issue with Visual Studio 2012 hanging on startup. While trying to isolate the source of the problem, I could only get Visual Studio to start in safe mode (using devenv.exe /safemode), and in that mode you can’t disable any extensions through the GUI. In the end, I started poking around in the registry and found a key at HKCU\Software\Microsoft\VisualStudio\11.0\ExtensionManager named “EnabledExtensions”. Deleting all the values under that key disabled all the extensions, which let me re-enable them one at a time and track down the problem extension.

Sporadic Thoughts of a Software Developer

Sunday, February 9, 2014

Monday, November 18, 2013

Accessing 64-bit Registry Keys from 32-bit Powershell

On my current project we’re using SolutionScripts to perform automated initialization of the development environment. One of our steps restores a SQL Server database from a provided backup filename. However, the Powershell script that performs the restore looks in the registry to determine the default SQL Server locations for Data and Log files (this script was previously used on a build server where we had put the data and log files on separate physical drives for performance reasons).

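The problem is that the Package Manager Console window in Visual Studio runs in 32-bit mode, while SQL Server is a 64-bit application whose registry entries live in the 64-bit view of the registry. A 32-bit process that opens HKLM:\SOFTWARE is redirected to the 32-bit view (Wow6432Node), so the SQL Server keys are not visible to it. Thus, the following command fails to read the desired value: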

$dataPath = gi "HKLM:\SOFTWARE\Microsoft\Microsoft SQL Server\$instanceName\MSSQLServer" | %{$_.GetValue("DefaultData")}

One possible solution would be to use Invoke-Command to effectively execute the statement in a 64-bit process, like so:

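$dataPath = Invoke-Command -ComputerName localhost -ArgumentList $instanceName -ScriptBlock {param($name) gi "HKLM:\SOFTWARE\Microsoft\Microsoft SQL Server\$name\MSSQLServer" | %{$_.GetValue("DefaultData")}}

The instance name is passed in through -ArgumentList because local variables are not visible inside a script block that runs in a remote session. The problem with this approach is that it uses Powershell Remoting and requires that each developer execute the following command as Administrator:

Enable-PsRemoting -Force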

Anytime I have to execute something as an Administrator and use the -Force flag (or confirm through a bunch of prompts to explicitly change system settings), a shiver goes up my spine.

Powershell 2.0 Solution

I pieced together a solution that works with Powershell 2.0 using the following tidbits:

- While the out-of-the-box APIs prior to .NET 4.0 do not give you the ability to read the 64-bit registry from a 32-bit process, the Windows API does support it.

- With a little help from pinvoke.net you can piece together the import definitions in C# to make the necessary Windows API calls.

- With a little help from LINQPad (and plenty of tinkering and process crashes), you can get the allocation and releasing of memory buffers just right to successfully read 64-bit registry values from a 32-bit process.

- With the use of the Add-Type cmdlet in Powershell, you can actually make an embedded C# class available to your scripts.

Here’s the C# source:

using Microsoft.Win32;

using System;

using System.Text;

using System.Runtime.InteropServices;

namespace Dlp

{

public static class Registry

{

static UIntPtr HKEY_LOCAL_MACHINE = new UIntPtr(0x80000002u);

const int KEY_WOW64_64KEY = 0x100;

const int KEY_ALL_ACCESS = 0xf003f;

const int KEY_QUERY_VALUE = 0x0001;

const int RegSz = 0x00000002;

const int RegMultiSz = 7;

// Define other methods and classes here

[DllImport("advapi32.dll")]

static extern int RegOpenKeyEx(

UIntPtr hKey,

string subKey,

int options,

int sam,

out UIntPtr phkResult );

/* Retrieves the type and data for the specified registry value. */

[DllImport("Advapi32.dll", EntryPoint = "RegGetValueW", CharSet = CharSet.Unicode, SetLastError = true)]

// The Windows LONG return type is 32 bits wide, so it maps to int rather than long

internal static extern int RegGetValue(

UIntPtr hkey,

string lpSubKey,

string lpValue,

int dwFlags,

out int pdwType,

IntPtr pvData,

ref uint pcbData);

public static string GetRegistryStringValue(string key, string subkey, string valueName)

{

UIntPtr hkey = UIntPtr.Zero;

// Read-only access is enough to query a value; KEY_ALL_ACCESS on HKLM needs an elevated process

RegOpenKeyEx(HKEY_LOCAL_MACHINE, key, 0, KEY_WOW64_64KEY | KEY_QUERY_VALUE, out hkey);

int pdwType = 0;

IntPtr pvData = IntPtr.Zero;

uint pcbData = 0;

// Determine necessary buffer length

RegGetValue(hkey, subkey, valueName, RegSz, out pdwType, pvData, ref pcbData);

// If there's no data available...

if (pcbData == 0)

return null;

// Allocate the correctly sized buffer

pvData = Marshal.AllocHGlobal((int) pcbData);

RegGetValue(hkey, subkey, valueName, RegSz, out pdwType, pvData, ref pcbData);

// Copy unmanaged buffer to a byte array

byte[] ar = new byte[pcbData-2]; // Trim trailing null character from buffer

Marshal.Copy(pvData, ar, 0, ar.Length);

// Free the unmanaged buffer

Marshal.FreeHGlobal(pvData);

// Convert the unicode bytes to a string

string value = Encoding.Unicode.GetString(ar);

return value;

}

}

}

The code makes use of the RegOpenKeyEx and RegGetValue Windows API functions, along with the ever so important KEY_WOW64_64KEY option flag which is what actually provides the 64-bit registry access. Beyond that, there’s some boring (but very dangerous) buffer management and unicode string encoding (boy, if I had a nickel for every time I crashed LINQPad while…)
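For comparison, the same flag can be seen at work in a much shorter form. The following is only a sketch in Python, not part of the solution described here: the function name read_hklm_value_64 is made up for illustration, it requests read-only access (KEY_QUERY_VALUE) rather than full access, and it simply returns None when run anywhere other than Windows.

```python
import sys

KEY_QUERY_VALUE = 0x0001   # read-only access to a key's values
KEY_WOW64_64KEY = 0x0100   # ask for the 64-bit registry view, even from a 32-bit process
ACCESS_MASK = KEY_QUERY_VALUE | KEY_WOW64_64KEY


def read_hklm_value_64(sub_key, value_name):
    """Return a value from the 64-bit view of HKLM, or None if it cannot be read."""
    if sys.platform != "win32":
        return None  # the registry only exists on Windows
    import winreg  # standard library module, available on Windows only
    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, sub_key, 0, ACCESS_MASK) as key:
            value, _value_type = winreg.QueryValueEx(key, value_name)
            return value
    except OSError:
        return None  # key or value missing, or access denied


print(hex(ACCESS_MASK))
```

On a Windows machine it would be called with the sub key path under SOFTWARE and the value name "DefaultData", in the same way as the C# function above.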

With the C# function written and nicely packaged up in a namespace and a static class, it’s time to make it available to the Powershell environment. I simply added a function to my database management Powershell module that looks like this:

Function Define-GetRegistryStringValue () {

$sourceCode = @"

using Microsoft.Win32;

using System;

using System.Text;

using System.Runtime.InteropServices;

namespace Dlp

{

public static class Registry

{

static UIntPtr HKEY_LOCAL_MACHINE = new UIntPtr(0x80000002u);

....

public static string GetRegistryStringValue(string key, string subkey, string valueName)

{

UIntPtr hkey = UIntPtr.Zero;

RegOpenKeyEx(HKEY_LOCAL_MACHINE, key, 0, KEY_WOW64_64KEY | KEY_QUERY_VALUE, out hkey);

....

// Convert the unicode bytes to a string

string value = Encoding.Unicode.GetString(ar);

return value;

}

}

}

"@

Add-Type -TypeDefinition $sourceCode

}

Define-GetRegistryStringValue

I probably could have defined this more succinctly with just a string variable and an inline call to Add-Type, but this worked and I had spent enough time on the problem. With the code now available to Powershell, my updated call to read the registry (which works in both 64-bit and 32-bit modes) looks like this:

$dataPath = [Dlp.Registry]::GetRegistryStringValue("SOFTWARE\Microsoft\Microsoft SQL Server\$instanceName\MSSQLServer", $null, "DefaultData")

Powershell 3.0+ Solution

In .NET 4.0, Microsoft introduced the RegistryView enumeration. This enables 32-bit processes to read 64-bit values from .NET, and so it significantly simplifies the solution. Here is a Powershell function that provides the same behavior:

Function Get-RegistryValue64([string] $keyPath, [string] $valueName) {

$hklm64 = [Microsoft.Win32.RegistryKey]::OpenBaseKey([Microsoft.Win32.RegistryHive]::LocalMachine, [Microsoft.Win32.RegistryView]::Registry64);

$key = $hklm64.OpenSubKey($keyPath);

if ($key) {

return $key.GetValue($valueName)

} else {

return $null

}

}

Sunday, November 10, 2013

Determine the Exact Position of an XmlReader

I was dealing with a scenario today where I wanted to read through an XML file capturing the locations of various elements so that I could come back on a second pass and process the file using random access. To construct the XmlReader, I first created a StreamReader on the file and then passed that to the XmlReader.Create method to get my reader.

Initially I was looking at the StreamReader’s BaseStream property, but that stream reports the Position in increments of 1024 bytes due to the buffering behavior of the StreamReader. I poked around a bit looking for a solution and when I couldn’t find one I decided to try and roll my own.

Here is what I came up with, but be warned that your mileage may vary because I’m doing non-future-proof things like reflecting on private properties of the .NET Framework classes.

The key to the approach is that the XmlReader is backed by a StreamReader which itself is backed by a FileStream. Having access to the underlying FileStream gives us visibility into how much of the file has been read into the internal buffers of the XmlReader and the StreamReader.

Since the XmlReader uses an internal buffer of 4096 bytes, when it is first initialized from the StreamReader, it will read in the first 4096 bytes to fill its buffer. Since the StreamReader uses an internal buffer of 1024 bytes, the XmlReader’s initialization will force the StreamReader to retrieve four chunks of 1024 bytes. With its own internal buffer exhausted, the StreamReader will then read ahead in the FileStream another 1024 bytes.

The difficulty comes in determining where in the original file the XmlReader is positioned at any given moment since there are no public properties that report that information. As it turns out, we can calculate it by reading a few additional private fields on the StreamReader and XmlReader implementation classes. The basic formula looks (almost) like this:

Actual XmlReader Position =

FileStream Position – StreamReader Buffer Size – XmlReader Buffer Size

+ XmlReader Buffer Position + StreamReader Buffer Position
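To make the basic formula concrete, here is a small worked example in Python. It is only an illustration: the function name and the sample numbers are assumptions, describing a file with no byte-order mark in a single-byte encoding (so character counts and byte counts coincide), read just far enough that the XmlReader has filled its 4096-byte buffer and the StreamReader has read ahead one more 1024-byte chunk.

```python
def xml_reader_position(file_stream_pos, stream_reader_buffer_size,
                        xml_reader_buffer_size, xml_reader_buffer_pos,
                        stream_reader_buffer_pos):
    """Subtract everything that has been buffered, then add back what has been consumed."""
    return (file_stream_pos
            - stream_reader_buffer_size
            - xml_reader_buffer_size
            + xml_reader_buffer_pos
            + stream_reader_buffer_pos)


# Hypothetical snapshot: the FileStream has handed out 4096 + 1024 = 5120 bytes,
# the XmlReader is 100 characters into its buffer, and the StreamReader has not
# yet handed out anything from its freshly read 1024-byte chunk.
position = xml_reader_position(
    file_stream_pos=5120,
    stream_reader_buffer_size=1024,
    xml_reader_buffer_size=4096,
    xml_reader_buffer_pos=100,
    stream_reader_buffer_pos=0,
)
print(position)  # 5120 - 1024 - 4096 + 100 + 0 = 100
```

In this snapshot the FileStream reports 5120, yet the reader is really sitting at offset 100 in the file, which is exactly the gap the formula closes.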

Here’s the code for the XmlReader extension method:

using System.IO;

using System.Reflection;

using System.Xml;

public static class XmlReaderExtensions

{

private const long DefaultStreamReaderBufferSize = 1024;

public static long GetPosition(this XmlReader xr, StreamReader underlyingStreamReader)

{

// Get the position of the FileStream

long fileStreamPos = underlyingStreamReader.BaseStream.Position;

// Get current XmlReader state

long xmlReaderBufferLength = GetXmlReaderBufferLength(xr);

long xmlReaderBufferPos = GetXmlReaderBufferPosition(xr);

// Get current StreamReader state

long streamReaderBufferLength = GetStreamReaderBufferLength(underlyingStreamReader);

int streamReaderBufferPos = GetStreamReaderBufferPos(underlyingStreamReader);

long preambleSize = GetStreamReaderPreambleSize(underlyingStreamReader);

// Calculate the actual file position

long pos = fileStreamPos

- (streamReaderBufferLength == DefaultStreamReaderBufferSize ? DefaultStreamReaderBufferSize : 0)

- xmlReaderBufferLength

+ xmlReaderBufferPos + streamReaderBufferPos - preambleSize;

return pos;

}

#region Supporting methods

private static PropertyInfo _xmlReaderBufferSizeProperty;

private static long GetXmlReaderBufferLength(XmlReader xr)

{

if (_xmlReaderBufferSizeProperty == null)

{

_xmlReaderBufferSizeProperty = xr.GetType()

.GetProperty("DtdParserProxy_ParsingBufferLength",

BindingFlags.Instance | BindingFlags.NonPublic);

}

return (int) _xmlReaderBufferSizeProperty.GetValue(xr);

}

private static PropertyInfo _xmlReaderBufferPositionProperty;

private static int GetXmlReaderBufferPosition(XmlReader xr)

{

if (_xmlReaderBufferPositionProperty == null)

{

_xmlReaderBufferPositionProperty = xr.GetType()

.GetProperty("DtdParserProxy_CurrentPosition",

BindingFlags.Instance | BindingFlags.NonPublic);

}

return (int) _xmlReaderBufferPositionProperty.GetValue(xr);

}

private static PropertyInfo _streamReaderPreambleProperty;

private static long GetStreamReaderPreambleSize(StreamReader sr)

{

if (_streamReaderPreambleProperty == null)

{

_streamReaderPreambleProperty = sr.GetType()

.GetProperty("Preamble_Prop",

BindingFlags.Instance | BindingFlags.NonPublic);

}

return ((byte[]) _streamReaderPreambleProperty.GetValue(sr)).Length;

}

private static PropertyInfo _streamReaderByteLenProperty;

private static long GetStreamReaderBufferLength(StreamReader sr)

{

if (_streamReaderByteLenProperty == null)

{

_streamReaderByteLenProperty = sr.GetType()

.GetProperty("ByteLen_Prop",

BindingFlags.Instance | BindingFlags.NonPublic);

}

return (int) _streamReaderByteLenProperty.GetValue(sr);

}

private static PropertyInfo _streamReaderBufferPositionProperty;

private static int GetStreamReaderBufferPos(StreamReader sr)

{

if (_streamReaderBufferPositionProperty == null)

{

_streamReaderBufferPositionProperty = sr.GetType()

.GetProperty("CharPos_Prop",

BindingFlags.Instance | BindingFlags.NonPublic);

}

return (int) _streamReaderBufferPositionProperty.GetValue(sr);

}

#endregion

}

Saturday, May 4, 2013

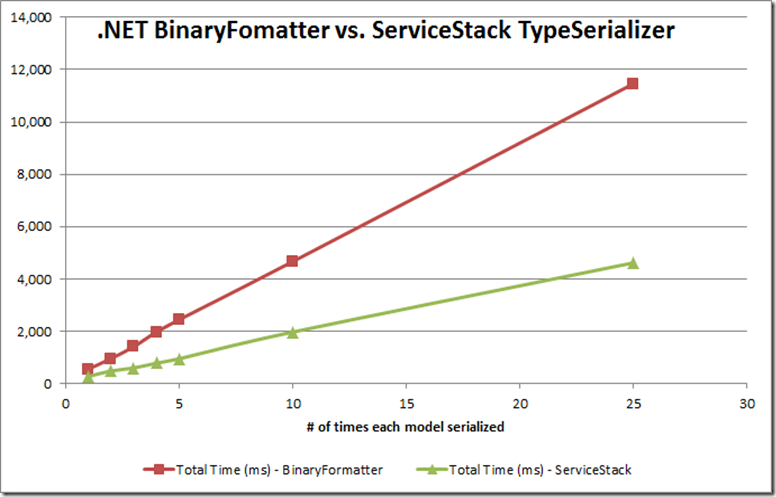

A Performance Comparison of the .NET BinaryFormatter and the ServiceStack TypeSerializer

I have recently been doing some performance profiling on our web application and one of the areas that showed up as somewhat significant was our data serialization. Our application uses a caching mechanism where on every request, every view model is either serialized to cache after it is built, or it is deserialized from cache in lieu of rebuilding it. Our initial implementation used the .NET BinaryFormatter. Given the amount of runtime execution traffic that goes through the serializer, I decided to take a closer look at optimizing it.

I first attempted to use protobuf-net, but due to the use of dynamics and “object” types in some of our models, I decided the process to get it to work was too invasive to pursue. Instead, I converted our serialization to use ServiceStack’s TypeSerializer. On their wiki, they show the following results:

| Serializer | Size | Performance |
|---|---|---|
| Microsoft DataContractSerializer | 4.68x | 6.72x |
| Microsoft JsonDataContractSerializer | 2.24x | 10.18x |
| Microsoft BinaryFormatter | 5.62x | 9.06x |
| NewtonSoft.Json | 2.30x | 8.15x |
| ProtoBuf.net | 1x | 1x |
| ServiceStack TypeSerializer | 1.78x | 1.92x |

Their table baselines the performance of all the serializers against protobuf-net, however since I am only comparing BinaryFormatter to ServiceStack TypeSerializer, you can translate their numbers to look like this:

| Serializer | Size | Performance |
|---|---|---|
| Microsoft BinaryFormatter | 3.16x | 4.72x |
| ServiceStack TypeSerializer | 1x | 1x |
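The translation is just a division of each published ratio by the corresponding ServiceStack TypeSerializer ratio. A short Python sketch of that arithmetic follows; the rebaseline helper is a name made up for illustration, and the input numbers are the two relevant rows from the wiki table quoted above.

```python
# Ratios as published on the ServiceStack wiki, relative to protobuf-net (1x):
# (size, performance)
published = {
    "Microsoft BinaryFormatter": (5.62, 9.06),
    "ServiceStack TypeSerializer": (1.78, 1.92),
}


def rebaseline(results, baseline_name):
    """Express every row relative to the chosen baseline row instead of protobuf-net."""
    base_size, base_perf = results[baseline_name]
    return {
        name: (round(size / base_size, 2), round(perf / base_perf, 2))
        for name, (size, perf) in results.items()
    }


rebaselined = rebaseline(published, "ServiceStack TypeSerializer")
for name, (size, perf) in rebaselined.items():
    print(f"{name}: size {size}x, performance {perf}x")
```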

I ran all of our web application view models (178 different classes) through the two serializers. Each timing in the chart below represents the total time to serialize and deserialize each model “n” times (where n={1, 2, 3, 4, 5, 10, 25, 50, 100, 250, 500}), and all tests were performed using “Release” builds of all classes.

The first chart shows how ServiceStack’s serializer gets off to a slow start, but surpasses the BinaryFormatter in performance at a certain point.

Focusing in on the performance for the first 100 serializations of each model, you can see the break-even point on my data set comes after about the 40th serialization.

As the number of repeat serializations increases, the performance of ServiceStack’s serializer settles in at taking about 42% of the time, and is consistently (within my test model data) producing byte arrays that are 24% of the size of those produced by the BinaryFormatter.

To state my results in the same format as seen on ServiceStack’s wiki, you would see the following:

| Serializer | Size | Performance |
|---|---|---|
| Microsoft BinaryFormatter | 4.22x (vs. 3.16x) | 2.27x (vs. 4.72x) |
| ServiceStack TypeSerializer | 1x | 1x |

As you can see, I am not seeing nearly the performance increase I had been hoping for, based on their published results. I decided to run some additional tests where I pre-warmed the serializers (serialized one of each of the models before starting the timings). The results were certainly more favorable to ServiceStack, and it serialized my data about 2.62x faster than the BinaryFormatter over the 500 serializations, but still nowhere near their performance numbers.

Based on these results, I think it is indeed worth using the ServiceStack TypeSerializer if you anticipate that you’re going to serialize and deserialize the same model types many times over. For even better performance, prepare the serializer by processing each serializable type as part of your application startup activities, but temper your expectations. Performance is good, but not quite what you might be hoping for.

Thursday, December 30, 2010

Reinitializing your Powershell environment without closing and reopening

I’ve been running our builds with psake, and have found that I get errors if I try to run a build script twice in the same Powershell session. I looked around for a way to reset the Powershell environment without closing and reopening the console window, but couldn’t find anything.

Here’s what I came up with that works for me.

Remove-Variable * -ErrorAction SilentlyContinue; Remove-Module *; $error.Clear(); Clear-Host

And now, the short version:

rv * -ea SilentlyContinue; rmo *; $error.Clear(); cls

Setting dynamic connection string for dtexec.exe in Powershell

This one had me banging my head on the wall and hitting Google hard until I just took a step back and tried to get inside of Powershell’s head.

Basically I have a Powershell-based build script that (among other things) needs to execute an SSIS package that initializes a database from an Excel spreadsheet, and at the end of the build process deploys a web application to IIS using the Powershell WebAdministration module.

But here’s the catch: the Excel data support in SSIS only works in 32-bit mode and the WebAdministration module only works in 64-bit mode. So what I need to do is launch the 32-bit version of the dtexec.exe utility from my 64-bit Powershell script. Seems easy enough, right? Nope.

After struggling for a while in Powershell, I switched to the good old Windows Command Prompt and managed to get it working fairly quickly using the following syntax:

"C:\Program Files (x86)\Microsoft SQL Server\100\DTS\Binn\DTExec.exe"

/File "C:\Path\To\My\Package\Import Types.dtsx"

/Conn Connection1;"Provider=SQLNCLI10;Server=MYSERVER;Database=DB_ONE;Uid=USERNAME;Pwd=PASSWORD;"

/Conn Connection2;"Provider=SQLNCLI10;Server=MYSERVER;Database=DB_TWO;Uid=USERNAME;Pwd=PASSWORD;"

/Set \package.variables[User::ExcelFilePath].Value;"C:\Path\To\My\Data\Import Types.Data.xls"

If you just try to slap a “&” on the front and execute it in Powershell, it doesn’t work. I tried many variations until I arrived at this particular translation:

& "C:\Program Files (x86)\Microsoft SQL Server\100\DTS\Binn\DTExec.exe"

/File "C:\Path\To\My\Package\Import Types.dtsx"

/Conn "Connection1;`"Provider=SQLNCLI10;Server=MYSERVER;Database=DB_ONE;Uid=USERNAME;Pwd=PASSWORD;`""

/Conn "Connection2;`"Provider=SQLNCLI10;Server=MYSERVER;Database=DB_TWO;Uid=USERNAME;Pwd=PASSWORD;`""

/Set "\package.variables[User::ExcelFilePath].Value;C:\Path\To\My\Data\Import Types.Data.xls"

I’ve tried to make complete sense of why that particular formatting works, but I can’t. Here are a couple of observations about the Powershell version:

- The argument values need to be wrapped in quotes in their entirety.

- The quotes found in the Command Prompt version need to be escaped in Powershell in order for DTExec to receive them correctly.

But that second observation is only almost true. The /Set argument only works if you drop the embedded quotes altogether. When I tried retaining them from the Command Prompt version and escaping them for inclusion in the Powershell, I got an error. Dropping the quotes altogether worked, despite the embedded space in the file name. At this point, however, I’m not arguing!

Tuesday, September 9, 2008

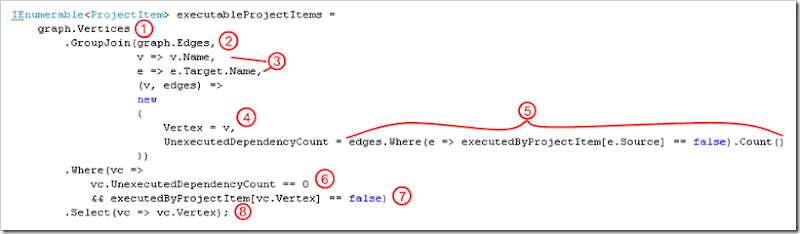

Implementing a Breadth-First Search with LINQ

I was working on some code today that manages the order of execution of some ETL jobs based on some known dependency information. I've gathered the dependency information into a directed graph that I created using the QuickGraph library. In order to prevent jobs from executing before their dependencies, I need to process the nodes (jobs) of the graph in a breadth-first manner. For my unit test fixtures I have created the following directed graph:

When I tried using the built-in breadth first search implementation, it visited the nodes in the following order 1, 3, 2, 5, and 4. The problem is that it assumes a single root and does not look at the fact that 5 depends on 4, which hasn't run yet. As I was digging around a bit with the .NET Reflector as to whether I should try implementing my own breadth-first search algorithm, I began thinking that there would probably be an easier solution possible with LINQ. The highest number of vertices that I'm going to be dealing with at once is about 100, so performance really isn't much of a concern.

Note: I should mention that the vertices of the graph represent my jobs, which I originally was referring to as "Project Items". In the code and comments below, these are all synonymous. Eric Evans would be disappointed in me for my lack of a ubiquitous language.

I started by initializing a Dictionary to track whether or not a particular job has been executed yet (starting with "false"):

// Initialize dictionary to track execution of all vertices

Dictionary<ProjectItem, bool> executedByProjectItem = graph.Vertices.ToDictionary(v => v, e => false);

Then I loop until they've all been executed:

// Keep looping while some jobs have not been executed

while (executedByProjectItem.Values.Any(e => !e)) {...}

Inside the loop, the first thing I want to do is get a list of all the vertices (ProjectItems) that can currently be executed. To get this list:

1. I start with the graph's vertices and ...

2. I perform a join to the edges...

3. ...where the vertex name matches the edge's target vertex's name. The GroupJoin method provides a collection of edges for each vertex in my graph.

4. From that join, I create a new anonymous type that contains a reference to the vertex,

5. ...and then a count of the related edges for which the source job has yet to execute.

6. With that calculation in hand, I filter the list down to just those vertices that have no unexecuted dependencies

7. ...and have not already been executed themselves (root vertices would otherwise continue to be executed since they will always have an UnexecutedDependencyCount of 0).

8. In the final step, I use the Select projection method to create a return set of IEnumerable<ProjectItem> instead of the anonymous type, since that's what I'm really interested in.

The final step of the loop is to just iterate through all the jobs that can be executed, and uh... execute them.
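The query described above can be sketched roughly as follows. This is a Python approximation rather than the LINQ code itself, and it is evaluated eagerly (plain lists). The edge list is an assumption inferred from the job outputs shown in this post (1 feeds 3, 3 feeds 2, and both 2 and 4 feed 5), and names such as runnable_jobs are made up for illustration.

```python
# Assumed dependency edges as (source, target): the target depends on the source.
EDGES = [(1, 3), (3, 2), (2, 5), (4, 5)]
VERTICES = [1, 2, 3, 4, 5]


def runnable_jobs(vertices, edges, executed):
    """Return the jobs that can run now, given which jobs have already executed."""
    # Group join: pair every vertex with the edges that point at it.
    grouped = [(v, [e for e in edges if e[1] == v]) for v in vertices]
    # Project each vertex together with its count of unexecuted dependencies.
    counted = [(v, sum(1 for src, _ in incoming if not executed[src]))
               for v, incoming in grouped]
    # Keep vertices with no unexecuted dependencies that have not run themselves,
    # and return just the vertices rather than the intermediate pairs.
    return [v for v, unexecuted in counted if unexecuted == 0 and not executed[v]]


executed = {v: False for v in VERTICES}

first_pass = runnable_jobs(VERTICES, EDGES, executed)
for job in first_pass:
    executed[job] = True  # stand-in for actually executing the job

second_pass = runnable_jobs(VERTICES, EDGES, executed)
print(first_pass, second_pass)
```

Wrapping the call to runnable_jobs in a loop that continues until every entry in executed is true gives the full pass-by-pass iteration.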

When I ran this code, the output looked like this:

Executing job: projectItem1

Executing job: projectItem3

Executing job: projectItem4

=== Pass complete ====

Executing job: projectItem2

Executing job: projectItem5

=== Pass complete ====

There is a subtle issue at work here. Based on my query, I should have only seen 1 and 4 in the first pass. Job 3 should have been executed on the second pass, once Job 1 had been executed. However, because LINQ defers execution of the query until the results are enumerated, Job 1 had already been marked as executed by the time the query evaluated Job 3, so Job 3 just showed up to the party late. It's obviously not wrong, it was just a little unexpected the first time I saw it. To get a static list on the first pass, I could add a call to ToList() at #8 in the code listing above, which would have iterated through the collection immediately. With the ToList call in place, the code produces the following output:

Executing job: projectItem1

Executing job: projectItem4

=== Pass complete ====

Executing job: projectItem3

=== Pass complete ====

Executing job: projectItem2

=== Pass complete ====

Executing job: projectItem5

=== Pass complete ====

It's interesting how the deferred execution actually cuts down on the number of times I have to iterate through the jobs to execute them all (from 4 to 2), and because the results will still be correct, I've gone without the ToList in my final copy of the code.
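Python generators are lazy in much the same way as a deferred LINQ query, so the effect can be reproduced in a few lines. This is a sketch under assumptions, not the original code: the edge list is inferred from the two outputs above, and the function names are hypothetical. Passing eager=True takes a snapshot of each pass, which plays the role of the ToList call.

```python
# Assumed dependency edges as (source, target): the target depends on the source.
EDGES = [(1, 3), (3, 2), (2, 5), (4, 5)]
VERTICES = [1, 2, 3, 4, 5]


def runnable_jobs(vertices, edges, executed):
    """Lazily yield jobs whose dependencies have all executed (evaluated on demand)."""
    for v in vertices:
        pending = sum(1 for src, dst in edges if dst == v and not executed[src])
        if pending == 0 and not executed[v]:
            yield v


def run_all(eager):
    """Execute every job and return the jobs grouped by pass."""
    executed = {v: False for v in VERTICES}
    passes = []
    while not all(executed.values()):
        batch = runnable_jobs(VERTICES, EDGES, executed)
        if eager:
            batch = list(batch)  # snapshot the pass up front
        this_pass = []
        for job in batch:
            executed[job] = True  # "execute" the job; a lazy batch sees this immediately
            this_pass.append(job)
        passes.append(this_pass)
    return passes


print(run_all(eager=False))  # lazy: later jobs can join a pass already in progress
print(run_all(eager=True))   # eager: each pass is fixed before any job runs
```

With these assumed edges the lazy version finishes in two passes and the eager version in four, matching the two outputs shown above.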

At the end of the day, I am happy with how that worked out. In about 30 lines of code with LINQ, I was able to implement a reliable Breadth First iteration of my directed graph with potentially multiple root nodes.