I have recently been doing some performance profiling on our web application, and one of the areas that showed up as somewhat significant was our data serialization. Our application uses a caching mechanism in which, on every request, every view model is either serialized to cache after it is built or deserialized from cache in lieu of rebuilding it. Our initial implementation used the .NET BinaryFormatter. Given how much request traffic passes through the serializer, I decided to take a closer look at optimizing it.

I first attempted to use protobuf-net, but due to the use of dynamics and “object” types in some of our models, I decided that getting it to work would be too invasive to pursue. Instead, I converted our serialization to use ServiceStack’s TypeSerializer. Their wiki shows the following results:

| Serializer | Size | Performance |
| --- | --- | --- |
| Microsoft DataContractSerializer | 4.68x | 6.72x |
| Microsoft JsonDataContractSerializer | 2.24x | 10.18x |
| Microsoft BinaryFormatter | 5.62x | 9.06x |
| NewtonSoft.Json | 2.30x | 8.15x |
| ProtoBuf.net | 1x | 1x |
| ServiceStack TypeSerializer | 1.78x | 1.92x |

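Their table baselines the size and performance of every serializer against protobuf-net, so in both columns a larger multiple is worse. Since I am only comparing BinaryFormatter to ServiceStack TypeSerializer, their numbers can be re-baselined against TypeSerializer (dividing each BinaryFormatter figure by the corresponding TypeSerializer figure) to look like this: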

| Serializer | Size | Performance |
| --- | --- | --- |
| Microsoft BinaryFormatter | 3.16x | 4.72x |
| ServiceStack TypeSerializer | 1x | 1x |
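The re-baselining is plain division, and a short sketch makes the arithmetic easy to check. The ratios are the ones quoted from the ServiceStack table; the function name `rebaseline` and the dictionary layout are just illustrative choices, not anything from their wiki.

```python
# Ratios as quoted from the ServiceStack table (protobuf-net = 1x).
# Each value is (size multiple, time multiple).
published = {
    "Microsoft BinaryFormatter": (5.62, 9.06),
    "ServiceStack TypeSerializer": (1.78, 1.92),
}


def rebaseline(table, baseline):
    """Divide every (size, time) pair by the baseline row's pair."""
    base_size, base_time = table[baseline]
    return {
        name: (round(size / base_size, 2), round(time / base_time, 2))
        for name, (size, time) in table.items()
    }


rebased = rebaseline(published, "ServiceStack TypeSerializer")
for name, (size, time) in rebased.items():
    print(f"{name}: size {size}x, time {time}x")
```

This reproduces the 3.16x and 4.72x figures for the BinaryFormatter row.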

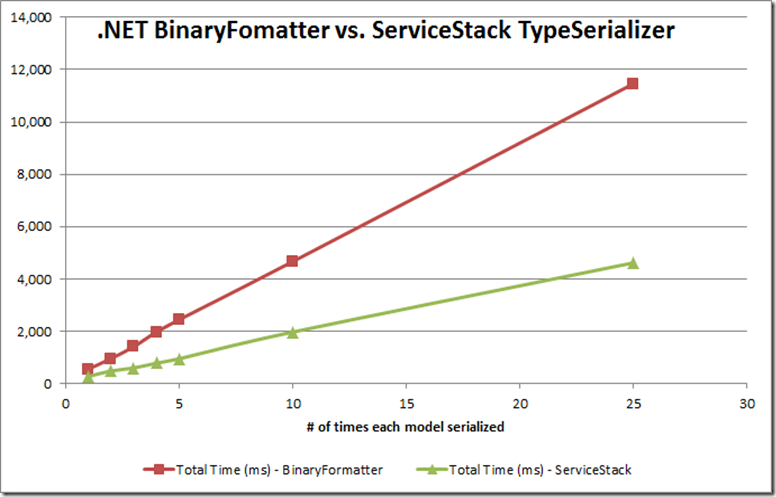

I ran all of our web application view models (178 different classes) through the two serializers. Each timing in the charts represents the total time to serialize and then deserialize each model “n” times (where n={1, 2, 3, 4, 5, 10, 25, 50, 100, 250, 500}). All timings were taken using “Release” builds of all classes.
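For readers who want to run a similar measurement, here is a minimal sketch of that kind of harness. It is not the code I used: it is written in Python for brevity, the `json` module stands in for the serializer under test, and `time_round_trips`, `CHECKPOINTS`, `sample_models`, and the `prewarm` flag are all hypothetical names. It records the cumulative time after the 1st, 2nd, ... 500th round trip of each model, so any one-time start-up cost is included in the early checkpoints.

```python
import json
import time

# The repeat counts used in the post.
CHECKPOINTS = [1, 2, 3, 4, 5, 10, 25, 50, 100, 250, 500]


def time_round_trips(models, serialize, deserialize,
                     checkpoints=CHECKPOINTS, prewarm=False):
    """Return {n: seconds}: total time for n round trips of every model.

    If prewarm is True, each model gets one untimed round trip first,
    which corresponds to the pre-warmed runs described later in the post.
    """
    if prewarm:
        for model in models:
            deserialize(serialize(model))

    totals = {n: 0.0 for n in checkpoints}
    for model in models:
        elapsed = 0.0
        for i in range(1, max(checkpoints) + 1):
            start = time.perf_counter()
            deserialize(serialize(model))
            elapsed += time.perf_counter() - start
            if i in totals:
                # Add this model's cumulative time at checkpoint i.
                totals[i] += elapsed
    return totals


# Stand-in data and serializer, purely for illustration.
sample_models = [{"id": 1, "name": "example"}, {"items": list(range(10))}]
totals = time_round_trips(sample_models, json.dumps, json.loads)
for n, seconds in totals.items():
    print(f"n={n}: {seconds:.6f}s")
```

Passing two different `serialize`/`deserialize` pairs to the same function gives two comparable series, one per serializer.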

The first chart shows how ServiceStack’s serializer gets off to a slow start, but surpasses the BinaryFormatter in performance at a certain point.

Focusing in on the performance for the first 100 serializations of each model, you can see that the break-even point on my data set comes after about the 40th serialization.
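One way to think about that break-even point is a simple linear cost model, assuming each serializer pays a one-time setup cost and then a roughly constant cost per call. That is an assumption on my part rather than something I measured directly, and the function name and the numbers in the example below are made up purely to illustrate the formula.

```python
import math


def break_even_count(setup_a, per_call_a, setup_b, per_call_b):
    """Smallest n where setup_b + n * per_call_b <= setup_a + n * per_call_a.

    Returns None if serializer B never catches up with serializer A.
    """
    if setup_b <= setup_a and per_call_b <= per_call_a:
        return 0  # B is never behind
    if per_call_b >= per_call_a:
        return None  # B starts behind and does not gain per call
    if setup_b <= setup_a:
        return 0  # B starts level or ahead and gains per call
    return math.ceil((setup_b - setup_a) / (per_call_a - per_call_b))


# Made-up units, NOT measurements: A has no setup cost but costs 1.0 per
# call, B pays 20.0 up front and then costs 0.5 per call.
print(break_even_count(0.0, 1.0, 20.0, 0.5))  # 40
```

Under this model, the higher the one-time setup cost relative to the per-call saving, the later the crossover.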

As the number of repeat serializations increases, the performance of ServiceStack’s serializer settles in at about 42% of the BinaryFormatter’s time, and it consistently (within my test model data) produces byte arrays that are 24% of the size of those produced by the BinaryFormatter.

To state my results in the same format as seen on ServiceStack’s wiki, you would see the following:

| Serializer | Size | Performance |
| --- | --- | --- |
| Microsoft BinaryFormatter | 4.22x (vs. 3.16x) | 2.27x (vs. 4.72x) |
| ServiceStack TypeSerializer | 1x | 1x |

As you can see, I am not seeing nearly the performance increase I had been hoping for, based on their published results. I decided to run some additional tests where I pre-warmed the serializers (serialized one of each of the models before starting the timings). The results were certainly more favorable to ServiceStack, and it serialized my data about 2.62x faster than the BinaryFormatter over the 500 serializations, but still nowhere near their performance numbers.
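The percentages and the "x" multiples in this post are two views of the same ratio, and converting between them is a one-line calculation. The helper names below are hypothetical. Note that the rounded percentages quoted earlier (42% and 24%) convert to slightly different multiples than the 2.27x and 4.22x in the table, presumably because the table was computed from unrounded measurements.

```python
def fraction_to_multiplier(fraction):
    """0.42 of the time means the other serializer takes 1 / 0.42 times as long."""
    return round(1.0 / fraction, 2)


def multiplier_to_percent(multiplier):
    """A 2.27x multiple means taking 100 / 2.27 percent of the other's time."""
    return round(100.0 / multiplier, 1)


print(fraction_to_multiplier(0.42))  # 2.38
print(fraction_to_multiplier(0.24))  # 4.17
print(multiplier_to_percent(2.27))   # 44.1
print(multiplier_to_percent(4.22))   # 23.7
print(multiplier_to_percent(2.62))   # 38.2
```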

Based on these results, I think it is indeed worth using the ServiceStack TypeSerializer if you anticipate that you’re going to serialize and deserialize the same model types many times over. For even better performance, prepare the serializer by processing each serializable type as part of your application startup activities, but temper your expectations. Performance is good, but not quite what you might be hoping for.
